AI

The keras package in R can be used to classify images

using convolutional neural networks (CNNs). These are machine learning

models having successive layers of representation of image or other

spatial data. The “deep” in deep learning refers to this layering

(deeper = more layers).

It is a good idea to refresh your memory on how CNNs work. I can recommend a couple of youtube videos for this: 3Blue1Brown and Brandon Rohrer.

Instructions below use the MNIST data set, which is a database of images of handwritten integer single digits 0-9. I include an example of a CNN to identify the digit from an image.

Read me

The R keras package is an interface to the Python

packages Keras and TensorFlow. To run, you can

either install all the packages on your own computer or use an online

platform in your browser.

Option 1: Use Kaggle

Kaggle is an online platform for data science and machine learning.

You can run R code in a Kaggle notebook. The keras and

other needed packages are pre-installed.

You’ll need to set up a free account at Kaggle using your browser.

Then open a notebook.

Click the blue “+” symbol at top left to create a new notebook.

In the right pane of the notebook page, scroll down to “Session options” and select “R” as the language.

To enter R code, click +Code button in the notebook and type your commands inside the box. To run, click the little triangle to the left of the box.

To run the example data set on this R tips page, you will also need to add the pre-built data set to your notebook.

- In the right pane of your notebook, click “Add input” and select “Datasets”.

- Search for “mnist_numpy”.

- Scroll down and find “mnist.npz” by Vikram Tiwari

- Click the “+” button to add it.

To run, enter

library(keras)

library(reticulate)

library(tensorflow)

library(imager)Option 2: Use your own computer

The installation is required only once. R 4.5 uses the

keras3 package (use keras instead if you’re

still using R 4.4 or earlier). The following commands worked for me on a

Mac M2 running OS 15.6.1. Do they work for you?

install.packages("keras3")

library(keras3)

library(reticulate)

install_keras()

install.packages("imager")To run, enter

library(keras3)

library(imager)Example data set

For this example, we’ll use the MNIST data set, which consists of a large number of grayscale images of handwritten digits 0 to 9. The images are 28 x 28 pixels in size and are encoded as 3D arrays of numbers.

The data set is divided into a training set of 60,000 images and a test set of 10,000 images.

Read the data

Read the data into an R object.

If you are using Kaggle, you’ll need to read the data from the local file you added using the command above.

# Kaggle only

mnist <- dataset_mnist(path = "/kaggle/input/mnist-numpy/mnist.npz")If you are on your own computer, you can grab from the internet as follows.

# Local computer only (will take a minute)

mnist <- dataset_mnist()Extract the data

Then pull out the training and testing data. The training data will be used to fit the CNN model, and the test data set will be used to evaluate the accuracy of the model.

The objects x_train and x_test are arrays

containing the images, whereas y_train and

y_test are vectors that indicate the known (correct) digit

corresponding to each image.

# Extract image arrays

x_train <- mnist$train$x

x_test <- mnist$test$x

# Extract classifications

y_train <- mnist$train$y

y_test <- mnist$test$yArrays of images

The objects x_train and x_test are

3-dimensional arrays. Each image is represented as a 28 x 28 matrix of

numbers and is stored in the second and third dimensions of the array

with corresponding index. A matrix is just a 2D array.

To see the dimensions of the array, enter

dim(x_train)## [1] 60000 28 28dim(x_test)## [1] 10000 28 28The output means that x_train contains 60,000 images

each 28 x 28 pixels. x_test contains 10,000 images, also 28

x 28 pixels. The first dimension of the arrays index the images.

A grayscale image is typically represented by pixels containing numbers between 0 through 255. A black pixel is 0, whereas a white pixel has the value 255. Numbers in between are gray.

To see how the first image in the array is represented, type the following. If your output window was wide enough to show all 28 columns, you would see that the non-zero numbers seem to trace a handwritten digit “5”.

x_train[1, , ]## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11] [,12] [,13]

## [1,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [2,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [3,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [4,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [5,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [6,] 0 0 0 0 0 0 0 0 0 0 0 0 3

## [7,] 0 0 0 0 0 0 0 0 30 36 94 154 170

## [8,] 0 0 0 0 0 0 0 49 238 253 253 253 253

## [9,] 0 0 0 0 0 0 0 18 219 253 253 253 253

## [10,] 0 0 0 0 0 0 0 0 80 156 107 253 253

## [11,] 0 0 0 0 0 0 0 0 0 14 1 154 253

## [12,] 0 0 0 0 0 0 0 0 0 0 0 139 253

## [13,] 0 0 0 0 0 0 0 0 0 0 0 11 190

## [14,] 0 0 0 0 0 0 0 0 0 0 0 0 35

## [15,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [16,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [17,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [18,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [19,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [20,] 0 0 0 0 0 0 0 0 0 0 0 0 39

## [21,] 0 0 0 0 0 0 0 0 0 0 24 114 221

## [22,] 0 0 0 0 0 0 0 0 23 66 213 253 253

## [23,] 0 0 0 0 0 0 18 171 219 253 253 253 253

## [24,] 0 0 0 0 55 172 226 253 253 253 253 244 133

## [25,] 0 0 0 0 136 253 253 253 212 135 132 16 0

## [26,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [27,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [28,] 0 0 0 0 0 0 0 0 0 0 0 0 0

## [,14] [,15] [,16] [,17] [,18] [,19] [,20] [,21] [,22] [,23] [,24] [,25]

## [1,] 0 0 0 0 0 0 0 0 0 0 0 0

## [2,] 0 0 0 0 0 0 0 0 0 0 0 0

## [3,] 0 0 0 0 0 0 0 0 0 0 0 0

## [4,] 0 0 0 0 0 0 0 0 0 0 0 0

## [5,] 0 0 0 0 0 0 0 0 0 0 0 0

## [6,] 18 18 18 126 136 175 26 166 255 247 127 0

## [7,] 253 253 253 253 253 225 172 253 242 195 64 0

## [8,] 253 253 253 253 251 93 82 82 56 39 0 0

## [9,] 253 198 182 247 241 0 0 0 0 0 0 0

## [10,] 205 11 0 43 154 0 0 0 0 0 0 0

## [11,] 90 0 0 0 0 0 0 0 0 0 0 0

## [12,] 190 2 0 0 0 0 0 0 0 0 0 0

## [13,] 253 70 0 0 0 0 0 0 0 0 0 0

## [14,] 241 225 160 108 1 0 0 0 0 0 0 0

## [15,] 81 240 253 253 119 25 0 0 0 0 0 0

## [16,] 0 45 186 253 253 150 27 0 0 0 0 0

## [17,] 0 0 16 93 252 253 187 0 0 0 0 0

## [18,] 0 0 0 0 249 253 249 64 0 0 0 0

## [19,] 0 46 130 183 253 253 207 2 0 0 0 0

## [20,] 148 229 253 253 253 250 182 0 0 0 0 0

## [21,] 253 253 253 253 201 78 0 0 0 0 0 0

## [22,] 253 253 198 81 2 0 0 0 0 0 0 0

## [23,] 195 80 9 0 0 0 0 0 0 0 0 0

## [24,] 11 0 0 0 0 0 0 0 0 0 0 0

## [25,] 0 0 0 0 0 0 0 0 0 0 0 0

## [26,] 0 0 0 0 0 0 0 0 0 0 0 0

## [27,] 0 0 0 0 0 0 0 0 0 0 0 0

## [28,] 0 0 0 0 0 0 0 0 0 0 0 0

## [,26] [,27] [,28]

## [1,] 0 0 0

## [2,] 0 0 0

## [3,] 0 0 0

## [4,] 0 0 0

## [5,] 0 0 0

## [6,] 0 0 0

## [7,] 0 0 0

## [8,] 0 0 0

## [9,] 0 0 0

## [10,] 0 0 0

## [11,] 0 0 0

## [12,] 0 0 0

## [13,] 0 0 0

## [14,] 0 0 0

## [15,] 0 0 0

## [16,] 0 0 0

## [17,] 0 0 0

## [18,] 0 0 0

## [19,] 0 0 0

## [20,] 0 0 0

## [21,] 0 0 0

## [22,] 0 0 0

## [23,] 0 0 0

## [24,] 0 0 0

## [25,] 0 0 0

## [26,] 0 0 0

## [27,] 0 0 0

## [28,] 0 0 0Classification vectors

The vectors y_train and y_test contain the

known digit corresponding to each image in the training and test data

sets. For example, here are the correct digits correponding to each of

the first few images in the training data set.

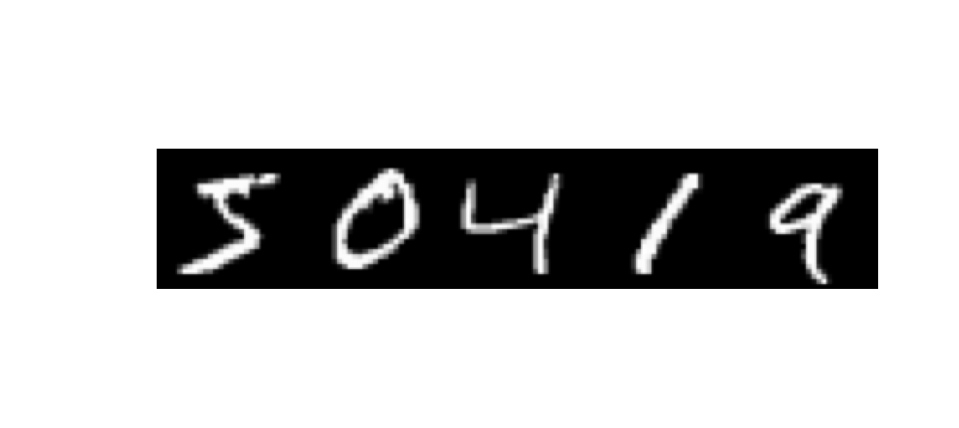

head(y_train)## [1] 5 0 4 1 9 2Process images

View images

To view an image with imager, enter the following. The

image is a “5” but it is transposed, a behavior of the

imager plot() function.

plot(as.cimg(x_train[1, , ]), axes = FALSE)

The matrix must therefore be transposed with t() to plot

correctly.

x1 <- t(x_train[1, , ])

plot(as.cimg(x1), axes = FALSE)

We can plot several images at once by binding the matrices.

x5 <- rbind(t(x_train[1,,]), t(x_train[2,,]),

t(x_train[3,,]), t(x_train[4,,]), t(x_train[5,,]))

plot(as.cimg(x5), axes = FALSE)

Reshape images

Building a CNN using R’s keras package requires that

images be represented with three dimensions, whereas our arrays have the

images coded as 28 x 28 pixel 2D matrices. We need to add a third

dimension, which will be a 1 for grayscale images.

x_train_reshape <- array_reshape(x_train, c(dim(x_train), 1))

dim(x_train_reshape)## [1] 60000 28 28 1x_test_reshape <- array_reshape(x_test, c(dim(x_test), 1))

dim(x_test_reshape)## [1] 10000 28 28 1The reason is that color images are normally represented as 3D arrays. An 8-bit RGB color image has 3 channels (R, G, and B), and so an RGB color image would be represented by three matrices of numbers, one matrix for each channel. For example, an RGB color image of 28 x 28 pixels would be stored in an array with dimensions c(28, 28, 3). Even though a grayscale image has just one channel, for consistency the CNN expects it to be represented with three dimensions, with the last dimension a 1 (rather than a 3 as for an RGB color image).

Rescale images

For the CNN, the grayscale numbers, which range from 0 (black) to 255 (white) need to be rescaled to range from 0 to 1.

x_train_rescale <- x_train_reshape / 255

range(x_train_rescale)## [1] 0 1x_test_rescale <- x_test_reshape / 255

range(x_test_rescale)## [1] 0 1Convert image classes

For the CNN, the classification vectors containing the true digits corresponding to each image must be converted to binary class matrices. The number of response classes is 10, i.e., all the integers from 0 to 9. A value of “0” is represented by the row vector of indicators c(1,0,0,0,0,0,0,0,0,0), i.e., a 1 to indicate the first class and 0 otherwise. The value “1” is indicated by c(0,1,0,0,0,0,0,0,0,0), the number “2” is c(0,0,1,0,0,0,0,0,0,0), and so on.

This is accomplished in R as follows.

# Number of categories

num_classes <- length(unique(y_train))

num_classes## [1] 10# Convert

y_train_matrix <- to_categorical(y_train, num_classes)

head(y_train)## [1] 5 0 4 1 9 2head(y_train_matrix)## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

## [1,] 0 0 0 0 0 1 0 0 0 0

## [2,] 1 0 0 0 0 0 0 0 0 0

## [3,] 0 0 0 0 1 0 0 0 0 0

## [4,] 0 1 0 0 0 0 0 0 0 0

## [5,] 0 0 0 0 0 0 0 0 0 1

## [6,] 0 0 1 0 0 0 0 0 0 0y_test_matrix <- to_categorical(y_test, num_classes)

head(y_test)## [1] 7 2 1 0 4 1head(y_test_matrix)## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

## [1,] 0 0 0 0 0 0 0 1 0 0

## [2,] 0 0 1 0 0 0 0 0 0 0

## [3,] 0 1 0 0 0 0 0 0 0 0

## [4,] 1 0 0 0 0 0 0 0 0 0

## [5,] 0 0 0 0 1 0 0 0 0 0

## [6,] 0 1 0 0 0 0 0 0 0 0y_train_matrix has 60,000 rows, one for each of the

60,000 images in the training data set. Similarly,

y_test_matrix has 10,000 rows, one for each image in the

test data set.

CNN model

We can think of the images of handwritten numbers as the predictors

(x variables) and the known digit categories corresponding

to each image (0-9) as the response (y) variables in a

model. We will build a CNN model that predicts the digit from the images

with low error rates.

Make CNN model

At this point the number of options is enormous. To get started I

looked at other analyses of this same data set online. I also asked

ChatGPT for help constructing a convolutional neural net in the R

keras package, describing the nature of the data. The

following model was not overly complicated and seemed to work reasonably

well.

The first line of the code initializes a sequential model

(my_model). Sequential models in keras are

linear stacks of layers. We create a model by successively adding layers

to this initial model object, as in the steps below. Each function call

takes my_model as the first argument and returns the

modified model, which is then reassigned to my_model.

# Initialize

my_model <- keras_model_sequential()

# Add layers

my_model <- layer_conv_2d(my_model, filters = 32, kernel_size = c(3, 3),

activation = 'relu', input_shape = c(28, 28, 1))

my_model <- layer_max_pooling_2d(my_model, pool_size = c(2, 2))

my_model <- layer_conv_2d(my_model, filters = 32, kernel_size = c(3, 3),

activation = 'relu')

my_model <- layer_max_pooling_2d(my_model, pool_size = c(2, 2))

my_model <- layer_flatten(my_model)

my_model <- layer_dense(my_model, units = 32, activation = 'relu')

my_model <- layer_dense(my_model, units = num_classes, activation = 'softmax')Some explanation

If you’ve watch the videos you’ll have an idea as to what these layers mean.

The first layer is a convolutional layer. The parameters specify that this layer will have 32 filters, each of size of 3x3 pixels. The activation function used is ‘relu’ (Rectified Linear Unit). The input_shape indicates the dimensions of the input images.

The second layer adds a max pooling layer to the model, which reduces the spatial dimensions (height and width) of the input volume for the next convolutional layer. The pool_size of c(2, 2) means that the max pooling operation is applied over 2x2 regions, reducing the size of the feature maps by a factor of 2.

The third layer is another 2D convolutional layer with the same configuration as the first one: 32 filters of size 3x3 with ‘relu’ activation.

The fourth layer is another max pooling layer similar to the previous max pooling layer. It further reduces the spatial dimensions of the feature maps, reducing the number of parameters needed in subsequent layers.

The fifth layer is a flattening layer. After extracting features through convolutional and pooling layers, this layer flattens the 3D feature maps into 1D, allowing them to be fed into the dense layers that follow.

The sixth layer is the first dense (fully connected) layer. It receives the flattened input and applies a ‘relu’ activation function across 32 units (or neurons). This layer enables the network to learn non-linear combinations of the high-level features extracted by the convolutional layers.

The seventh layer outputs the probability distribution over the target classes, with the number of units equal to the number of classes (num_classes). The ‘softmax’ activation function is used to convert the outputs to probabilities, ensuring that they sum up to 1. This layer essentially classifies the input image into one of the num_classes categories based on the features learned by the network.

Each step builds upon the previous to progressively construct a model that can classify images into one of several categories based on their visual content.

Model summary

Here is a compact summary of the model. The number of parameters being fitted seems ridiculously high, on the same order as the number of data points. Whether this is a cause for concern depends mainly on whether the model ends up overfitting the data, which is evaluated by its accuracy when applied to the test data after training.

summary(my_model)## Model: "sequential"

## ┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓

## ┃ Layer (type) ┃ Output Shape ┃ Param # ┃

## ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩

## │ conv2d (Conv2D) │ (None, 26, 26, 32) │ 320 │

## ├───────────────────────────────────┼──────────────────────────┼───────────────┤

## │ max_pooling2d (MaxPooling2D) │ (None, 13, 13, 32) │ 0 │

## ├───────────────────────────────────┼──────────────────────────┼───────────────┤

## │ conv2d_1 (Conv2D) │ (None, 11, 11, 32) │ 9,248 │

## ├───────────────────────────────────┼──────────────────────────┼───────────────┤

## │ max_pooling2d_1 (MaxPooling2D) │ (None, 5, 5, 32) │ 0 │

## ├───────────────────────────────────┼──────────────────────────┼───────────────┤

## │ flatten (Flatten) │ (None, 800) │ 0 │

## ├───────────────────────────────────┼──────────────────────────┼───────────────┤

## │ dense (Dense) │ (None, 32) │ 25,632 │

## ├───────────────────────────────────┼──────────────────────────┼───────────────┤

## │ dense_1 (Dense) │ (None, 10) │ 330 │

## └───────────────────────────────────┴──────────────────────────┴───────────────┘

## Total params: 35,530 (138.79 KB)

## Trainable params: 35,530 (138.79 KB)

## Non-trainable params: 0 (0.00 B)Configure model

The model needs to be configured for training. Here we specify the

loss function (loss_categorical_crossentropy, suitable for

a response variable with multiple classes), the optimizer to be used (RMSprop)

and the metric used to evaluate the model during training and testing

(accuracy of classification).

my_model <- compile(my_model,

loss = loss_categorical_crossentropy,

optimizer = 'rmsprop',

metrics = c('accuracy'))Fit model

Finally, fit the model to the data. epochs sets to the

number of epochs to train the model. An epoch refers to one complete

pass of the entire training dataset through the CNN. More epochs result

in better fitting of the training data set, but too many can lead to

overfitting. The samples are passed through the model in batches of size

batch_size.

validation_split, if included, sets aside the indicated

proportion of samples and uses it to evaluate the metrics of fit at each

epoch. This is a useful way to track whether the model is overfitting

the data. Overfitting will be detected as a decline in the accuracy of

model predictions applied to the validation set even as the accuracy of

the model applied to the training data improves with increasing numbers

of epochs.

Your results will differ a little from those shown here because there are multiple sources of randomness in the training process (initialization of weights, the gradient descent process, the partitioning of training and validation sets, etc).

model_fit <- fit(my_model,

x_train_rescale, y_train_matrix,

batch_size = 128,

epochs = 8,

validation_split = 0.2)## Epoch 1/8

## 375/375 - 5s - 14ms/step - accuracy: 0.8949 - loss: 0.3534 - val_accuracy: 0.9697 - val_loss: 0.1079

## Epoch 2/8

## 375/375 - 5s - 13ms/step - accuracy: 0.9700 - loss: 0.0972 - val_accuracy: 0.9739 - val_loss: 0.0899

## Epoch 3/8

## 375/375 - 5s - 13ms/step - accuracy: 0.9797 - loss: 0.0651 - val_accuracy: 0.9808 - val_loss: 0.0647

## Epoch 4/8

## 375/375 - 6s - 15ms/step - accuracy: 0.9833 - loss: 0.0517 - val_accuracy: 0.9818 - val_loss: 0.0633

## Epoch 5/8

## 375/375 - 5s - 13ms/step - accuracy: 0.9872 - loss: 0.0410 - val_accuracy: 0.9850 - val_loss: 0.0520

## Epoch 6/8

## 375/375 - 5s - 13ms/step - accuracy: 0.9892 - loss: 0.0345 - val_accuracy: 0.9871 - val_loss: 0.0470

## Epoch 7/8

## 375/375 - 5s - 14ms/step - accuracy: 0.9904 - loss: 0.0291 - val_accuracy: 0.9843 - val_loss: 0.0586

## Epoch 8/8

## 375/375 - 5s - 13ms/step - accuracy: 0.9919 - loss: 0.0254 - val_accuracy: 0.9873 - val_loss: 0.0475Plot training history

Pay particular attention to the accuracy of the model applied to the validation set with increasing number of training epochs. It should rise and flatten. A decrease at later epochs will indicate overfitting, in which case you imght go back and try again with a simpler model.

plot(model_fit)

Model accuracy

See how accurate it is with the test data.

evaluate(my_model, x_test_reshape, y_test_matrix)## 313/313 - 1s - 2ms/step - accuracy: 0.9887 - loss: 6.4192## $accuracy

## [1] 0.9887

##

## $loss

## [1] 6.419201Use the model to predict the known response (digit) for each of the

test data images. y_test_probs will contain the

probabilities of image assignment to different digit classes.

y_test_pred will determine the single class with the

highest probability.

y_test_probs <- predict(my_model, x_test_rescale)## 313/313 - 1s - 2ms/stepy_test_pred <- max.col(y_test_probs, ties.method = "first") - 1Compare the known y-values (digits) in the test data set with the

model-predicted y-values (y_test_pred). You can see that

handwritten 7’s are sometimes mistakenly classified as 2’s, and

handwritten 8’s and 3’s are sometimes classified as 5’s.

table(y_test, y_test_pred)## y_test_pred

## y_test 0 1 2 3 4 5 6 7 8 9

## 0 971 1 2 0 0 1 2 2 1 0

## 1 0 1129 3 0 0 1 1 0 1 0

## 2 1 1 1027 2 0 0 0 1 0 0

## 3 0 0 0 1006 0 4 0 0 0 0

## 4 0 1 4 0 967 0 1 1 1 7

## 5 0 0 1 5 0 884 1 1 0 0

## 6 4 2 1 0 2 6 942 0 1 0

## 7 0 2 5 3 0 1 0 1016 0 1

## 8 4 0 4 3 0 3 0 0 958 2

## 9 1 2 3 3 1 9 0 7 0 983View some of the misclassified images. Here are a few of the test images of handwritten 7’s that were misclassified as 2’s.

z <- which(y_test == 7 & y_test_pred == 2)

z## [1] 1040 1227 1755 6663 9010plot(as.cimg(t(x_test[z[1], , ])), axes = FALSE)

Save model

Ignore the warning.

save_model_hdf5(my_model, "mymodel.h5")

# reload the saved model

my_model <- load_model_hdf5("mymodel.h5")© 2009-2026 Dolph Schluter