I have tested various SNP calling methods using exome re-sequencing data from 12 interior spruce samples. I tried Bowtie2, BWA (mem), Picard (mark duplicates) and GATK for indel realignment and base quality recalibration. For SNP calling I used mpileup with and without BAQ as well as the Unified Genotyper from GATK. For an interesting and informative workshop outlining the Broad best practices SNP calling pipeline check out these youtube videos (http://www.youtube.com/watch?v=1m0ZiEvzDKI&list=PLlMMtlgw6qNgNKNv5V9qmjAxbkHAZS1Mf). My results are in a series of blog posts and I hope you find them useful. Please let me know if you have any suggestions for SNP calling. We only want to do the alignments and SNP calling once for the entire set of samples, because it is going to take a long time!

Category Archives: Bioinformatics

Estimating Insert Sizes

We recently had some trouble estimating insert sizes with our Mate Pair (aka Jumping, larger insert sizes) Libraries. All the libraries sequenced by Biodiversity and the Genome Sciences Centre (GSC) were shockingly bad, but the libraries sequenced by INRA were very good. For example, according to the pipeline, the GSC 10kbp insert size library had an average 236bp insert size, but the INRA 20kb library an average insert size of 20630bp.

See the histogram for the 10kbp library:

GBS snp calling

One of the main ways we call SNPs in GBS data is using a maximum likelihood script derived from the original Hohenlohe stickleback RAD paper. It uses a likelihood function based on the number of reads for each base at a site to determine if its homozygous or heterozygous.

It’s been known for a while that it seemed to be biased against high coverage heterozygous sites. I took the script apart and realized that there was actually a serious problem with it. The likelihood function used factorials, and when there were over 175 reads at a site, perl broke down and started describing things as infinite. Then the script would call it as a homozygote by default.

Letting perl work with the huge numbers necessary makes it incredibly slow, so I put in a logic gate for high coverage sites. If there is over 150 reads at a site, and the second most numerous base makes up atleast 10% of the reads (i.e. at minimum 15), then it is heterozygous. Otherwise it is homozygous.

The rest of the script is the same. One could debate how best to call the high coverage scripts, but I suggest you use this script over the old one.

Filtering SNP tables

Previously I posted a pipeline for processing GBS data. At the end of the pipeline, there was a step where the loci were filtered for coverage and heterozygosity. How strict that filtering was could be changed, but it wasn’t obvious in the script and it was slow to rerun. I wrote a new script that takes a raw SNP table (with all sites, including ones with only one individual called) and calculates the stats for each loci. It is then simple and fast to use awk to filter this table for your individual needs. The only filtering the base script does is not print out sites that have four different bases called at the site.

Heterozygosity, read counts and GBS: PART 2

Subtitle: This time… its correlated.

Previously I showed that with the default ML snp calling on GBS data, heterozygosity was higher with high and low amounts of data. I then took my data, fed it through a snp-recaller which looks for sites that were called as homozygous but had at least 5 reads that matched another possible base at that position (i.e. a base that had been called there in another sample). I pulled all that data together, and put it into a single table with all samples where I filtered by:

C/C++ Dependency Troubleshooting

Unix C/C++ programs are very finicky about the compiler and library versions. Compiling is the process of translating human readable code into a binary executable or library that contains machine-friendly instructions. Linking is the process of telling multiple executables or libraries how to talk to each other.

gcc is a GNU C compiler that is typically used on unix systems. g++ is a GNU C++ compiler on unix systems. libstdc++ is the GNU standard C++ library. glibc is the GNU standard C library. When you install GCC on your unix machine, you are installing a package of the aforementioned items. The gcc unix command is smart enough to call either the gcc compiler or g++ compiler depending if you pass it C or C++ code.

If you attempt to run your program with an older standard library than it was originally linked it with, your program will crash and complain. Here are some tips to get around it.

C / C++ Troubleshooting

IN PROGRESS

Most of the high-performance bioinformatic programs are written in C or C++. Unfortunately, C and C++ code is some of the hardest code to debug. If you have only programmed casually in perl/python, you will not have a good time. Here are some tips to help you out, but you will most likely need someone with C / C++ programming experience and knowledge of the code to get you through it.

AWK unlinked SNP subsampling

For some analyses, like STRUCTURE, you often want unlinked SNPs. For my GBS data I ended up with from 1 to 10 loci on each contig which I wanted to subsample down to just one random loci per contig. It took me a while to figure out how to do this, so here is the script for everyone to use:

cat YOUR_SNP_TABLE | perl -MList::Util=shuffle -e ‘print shuffle(<STDIN>);’ | awk ‘! ( $1 in a ) {a[$1] ; print}’ | sort > SUBSAMPLED_SNP_TABLE

It takes you snp table, shuffles the rows using perl, filters out one unique row per contig using awk, then sorts it back into order. For my data, the first column is CHROM for the first row and then scaffold###### for the subsequent rows so the sort will place the CHROM row back on top. It might not for yours if you have different labels.

GBS, coverage and heterozygosity

I’m running some tests on my GBS data to look for population expansion. I know from looking at GBS data from an F1 genetic mapping population that for GBS data heterozygotes can be under called due to variation in amplification and digestions. Also, for my data observed heterozygosity is almost always under expected. Heterozygotes can also be overcalled when duplicated loci are aligned together. The tests I’m going to use explicitly use observed heterozygosity so this is worrying.

Text Editor Indenting & Highlighting

Do you hate reading code that is poorly indented or lacks syntax highlighting? Here are some common text editor fixes:

Continue reading

Batch Sequence Read Archive Submissions

Getting all of our data uploaded to the SRA is important. It is good to share our data publicly whenever possible, and just as importantly, it provides us with a free off-site backup of our raw data.

We keep a spreadsheet of the lab’s sequencing data here: http://bit.ly/17Z4X1P

*IMPORTANT*: The spreadsheet is curated by a few members of the lab and is not complete. Your data may not be listed here, if it is not, please do your best to add it. You can email me and Sebastien with any questions you might have.

I’ve added a column that indicates the submission status of each sample in the Sunflower Relatives and Wild Sunflowers tabs, so you can tell if your data has already been submitted.

Perl Troubleshooting

This is a collection of fixes for various issues I’ve had with Perl. Feel free to add any of your Perl tips here. I will move this to a wiki page if it gets too big.

All Perl scripts fail with error message “Compilation failed in require at…*.pm did not return true at …”

Unable to install packages on Debian with error message “Perl may be unconfigured”

How to Upload Files to Bitbucket (commandline)

BitBucket is an external source code repository server that hosts our shared code. Our repositories use Git as the version control program to keep track of file changes. The idea is you make changes to code on your local machine then share your code with everyone else by uploading to BitBucket.

The instructions below guide you step-by-step in uploading files to BitBucket using the commandline. Git is one of the most popular version control programs but it is not easy for beginners. If you want to do something that deviates from these steps, consult a git reference. Once you understand the basics of the git workflow, you can use a GUI program which can combine multiple steps in a single click.

Methods Write-up for Identifying Contamination in Sequencing Reads

Chris and I devised and implemented this method to identify contaminant DNA in our HA412 454 and Illumina WGS reads.

N.B. I will update this posts with links to the scripts once I get them up on the lab’s bitbucket account, plus extra details if I think they are necessary on review.

Picard MarkDuplicates Troubleshooting

Picard is a java-based bioinformatics tools suite that handles SAM/BAM files. Chris introduced me to it, and it’s pretty handy. However, it is very particular about the SAM format, which can leave you searching the FAQs and forums for quite a while.

The MarkDuplicates tool looks for duplicate reads in your library and either flags them in your SAM/BAM file or deletes them, depending on your settings. It can also output a metrics on duplication.

If you are doing any analysis which takes coverage into account, you will want to remove PCR duplicates from your libraries so that they do not artificially inflate coverage numbers. The folks from Celera are adamant about this step, since it can help reduce complexity of genome assembly.

Reads are considered duplicates if they have the same orientation and their 5′ ends map to the same reference coordinate. Picard does not care if reads are part of a pair and either pair end maps to separate sequence entries (e.g separate scaffolds or contigs or chromosomes) in your reference fastas file. This makes it better than samtools rmdup, which is unable to handle read pairs that hit separate reference sequence entries.

Reads marked as duplicate will have the 0x400 bit set in SAM flag (column 2). To check if your read has that bit set, use the Picard Flag Decoder website. When duplicate reads are deleted, the highest quality read is kept intact in the SAM/BAM.

Rosalind: Learn Bioinformatics Online Through Problem Solving

I was introduced to Rosalind, a problem-based Bioinformatics Tutorial website, and I think it’s fantastic. I wish I knew about it when I was first starting out. You can solve the problems in any language you want. The website does not run your code. It only grades your solution dataset. There are a large number of problems on different topics, from codon-finding to protein spectrum matching. I would say the problems are geared towards the beginner, but there is enough variety that a higher level bioinformaticist would also benefit from rounding out their knowledge.

Plastid genome assembly from low coverage WGSS data

This post describes the steps I took to assemble plastid genomes from low-coverage WGSS data. An overview of the approach can be found here.

Essentially, the method involves first mapping of quality-filtered reads to a reference plastid genome, and only selecting plastome-like reads from this mapping step for subsequent de-novo assembly. For the assembly step, I used the VELVET assembler, which performs well for small genomes and is quite fast.

GBS multiplexing

GBS_mutliplexing contains 2 scripts that may be useful to you if you are using GBS data. One essentially formats GBS reads for Tassel. The other demultiplexes the reads. A readme file in there explains things in more detail.

Edited: edited the readme and added a script to convert qseq to fastq

Genotype By Sequencing (GBS) Barcodes

Here are GBS_Barcodes and adapters that we currently have in the lab for GBS, for sequencing on an Illumina machine. They were designed using the site Deena Bioinformatics.

This information came from Greg Baute’s blog and I’ve just converted the file to .xls.

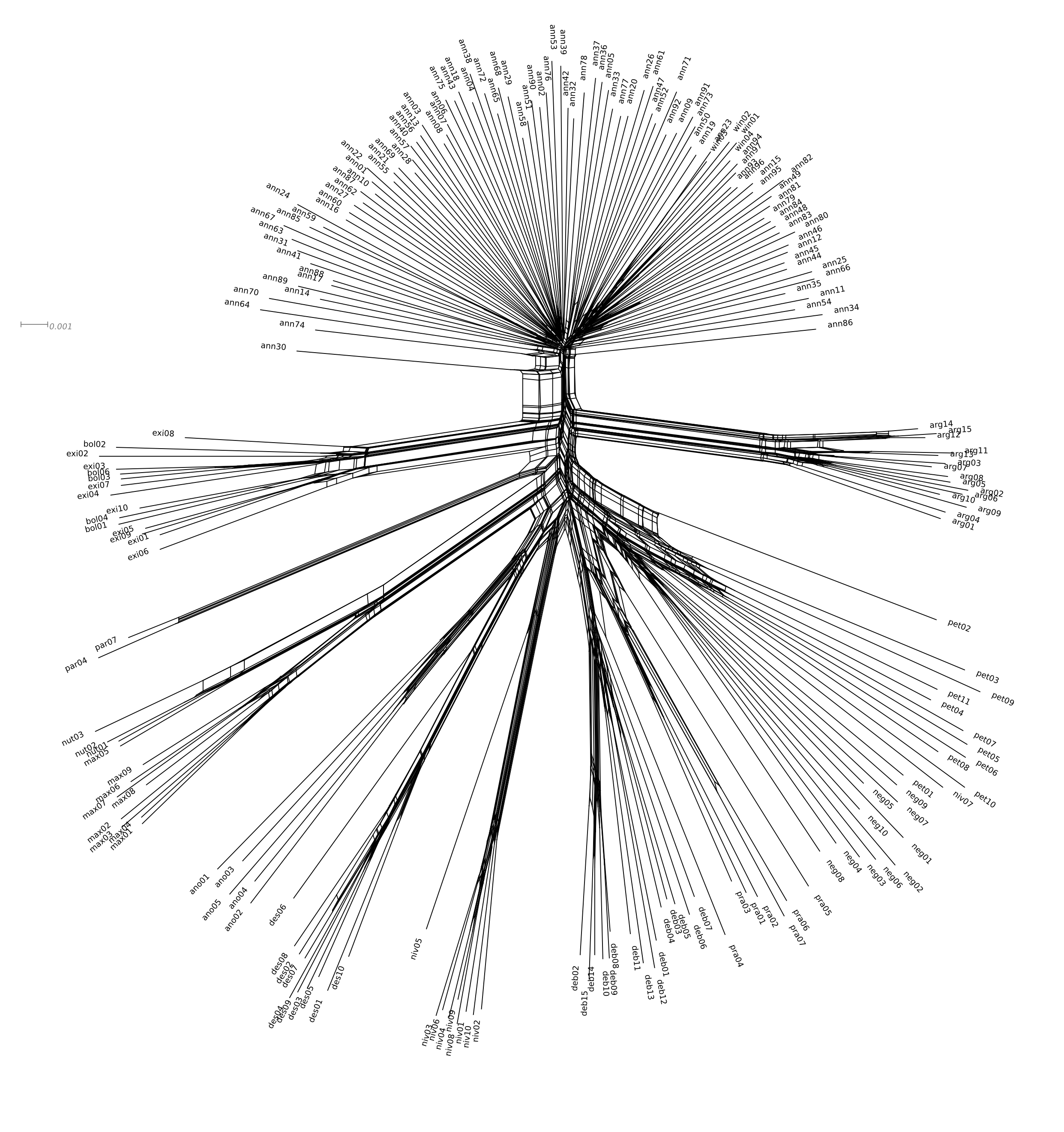

GBS phylogenetics

Here is a phylogenetic network of wild annuals. This is made from GBS data, aligned against the celera assembly. This is ~40,000 sites which have 2 alleles and <20% missing data.

Here is a excel file that describes the samples: GB_GBSdesign

The JPEG looks horrible, download it as a pdf: 213i_80m

Tab delimited under the fold: