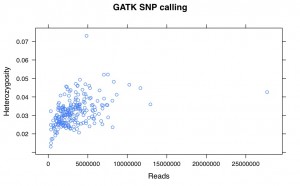

I’ve come to the realization that I’ve been making a mistake in my SNP calling using GATK. I’ve been filtering on site quality, not on individual genotype quality. This is a critical difference, and it leads to some dubious genotypes being called.

It works like this. For each position in the genome, GATK uses all the data together to decide whether an alternate allele exists. This is represented by the Phred-scaled QUAL score. A high QUAL score means GATK is confident that the alternate allele exists somewhere in your dataset. This number is often very high if you’re analyzing a whole plate of GBS, because it takes into account the data from all the individuals. I’ve been filtering based on this number.
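To make the Phred scale concrete, here is a minimal sketch of the standard conversion from a Phred-scaled score to the probability that the call is wrong. The function name is my own, and the scores in the loop are just illustrative values, not numbers from a real run.

```python
def phred_to_error_prob(q: float) -> float:
    """Convert a Phred-scaled score Q to an error probability, P = 10^(-Q/10)."""
    return 10 ** (-q / 10)


# Illustrative scores only: a very low, two moderate, and a higher value.
for q in (3, 20, 30, 45):
    print(f"Q={q}: P(error) = {phred_to_error_prob(q):.5f}")
```

A score of 20 corresponds to a 1 in 100 chance of error, while a score of 3 is close to a coin flip.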

There is also an MQ score, which is the (root mean square) mapping quality of all the reads at that site.

For each individual, if there are any reads at that site, GATK will output a genotype call (i.e. 0/0, 0/1, 1/1). It also outputs the number of reads supporting each allele, the GQ (genotype quality) score, which is the Phred-scaled confidence that the genotype call is correct, and the likelihoods of each possible genotype. When you use SelectVariants in GATK, it filters sites but not genotypes, so you can have a site that is good but individual genotype calls at that site that are bad.
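As a rough illustration of what sits in each per-individual column, here is a small sketch that pairs the keys from the VCF FORMAT column with one sample's values. It assumes the usual GATK UnifiedGenotyper layout of GT:AD:DP:GQ:PL (genotype, allele depths, total depth, genotype quality, genotype likelihoods); `parse_sample` is a name I made up for this example.

```python
def parse_sample(format_keys: str, sample: str) -> dict:
    """Pair FORMAT keys (e.g. 'GT:AD:DP:GQ:PL') with one sample's values.

    zip() stops at the shorter list, so a truncated sample field simply
    yields fewer keys instead of raising an error.
    """
    return dict(zip(format_keys.split(":"), sample.split(":")))


call = parse_sample("GT:AD:DP:GQ:PL", "0/0:17,0:18:45:0,45,353")
print(call["GT"], call["AD"], call["DP"], call["GQ"])
```

Here the individual is called 0/0 from 17 reference reads and 0 alternate reads, with a genotype quality of 45.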

If you use vcf-to-tab to convert your vcf to a tab-separated file, it will accept every genotype call. This means that some individuals will have 1 read supporting the reference and will be called as 0/0. The GQ (genotype quality) score will be 3, which is incredibly low (on the Phred scale that is roughly a 50% chance the call is wrong), but vcf-to-tab ignores that information and calls it anyway. Therefore a proportion of your genotype calls are highly questionable. This likely contributes to the heterozygote loss seen in GBS data, since a true heterozygote covered by a single read can only show one of its two alleles.
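The kind of per-genotype check that vcf-to-tab skips could look something like the sketch below. This is not Sam and Chris's script. `call_genotype` is a hypothetical helper, the thresholds (`min_gq=20`, `min_dp=5`) are placeholder values I picked for illustration, and it assumes the sample field is laid out as GT:AD:DP:GQ:PL.

```python
def call_genotype(ref: str, alt: str, sample: str,
                  min_gq: int = 20, min_dp: int = 5) -> str:
    """Return a two-letter genotype (e.g. 'AT'), or 'NN' if the call is weak.

    Assumes the sample field is ordered GT:AD:DP:GQ:PL. The thresholds are
    illustrative assumptions, not recommended values.
    """
    fields = sample.split(":")
    # Missing or truncated fields (e.g. './.' or './.:.:3') become NN.
    if len(fields) < 4 or "." in fields[0]:
        return "NN"
    gt, _ad, dp, gq = fields[:4]
    if not (dp.isdigit() and gq.isdigit()):
        return "NN"
    # Mask genotypes with too few reads or too low a genotype quality.
    if int(dp) < min_dp or int(gq) < min_gq:
        return "NN"
    alleles = [ref] + alt.split(",")  # index 0 = REF, 1.. = ALT alleles
    return "".join(alleles[int(i)] for i in gt.replace("|", "/").split("/"))


print(call_genotype("A", "T", "0/0:1,0:1:3:0,3,40"))        # one read, masked
print(call_genotype("A", "T", "0/1:9,8:17:99:255,0,240"))   # well supported
```

The first call is the single-read 0/0 case described above and gets masked as NN. The second has 17 reads and a high genotype quality, so it is kept.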

Sam and Chris wrote a script that converts a vcf file to tab but also makes sure that the quality (QUAL, GQ, MQ, and depth) is good. I’ll post it if they’re OK with it being up.

Edit: Here are the scripts. They are identical except that one outputs in IUPAC format (AT=W, AC=M, etc.) and the other outputs in the standard double nucleotide format (AA, AT, etc.): vcf2vertical_dep_GATK-UG and vcf2vertical_dep_GATK.
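For anyone who needs to go between the two output formats, here is a minimal sketch of collapsing a double nucleotide genotype into its IUPAC code. It is not taken from the scripts above. `to_iupac` is a made-up name, and returning N for anything it doesn't recognize is my own choice.

```python
# Standard IUPAC ambiguity codes for two-base combinations.
IUPAC = {"AC": "M", "AG": "R", "AT": "W", "CG": "S", "CT": "Y", "GT": "K"}


def to_iupac(genotype: str) -> str:
    """Collapse a two-letter genotype (e.g. 'AT') into a single IUPAC code."""
    if len(genotype) != 2:
        return "N"
    a, b = sorted(genotype.upper())  # order-insensitive: 'TA' == 'AT'
    if a == b:
        return a if a in "ACGT" else "N"  # homozygote, or NN -> N
    return IUPAC.get(a + b, "N")


for g in ("AA", "AT", "TA", "AC", "NN"):
    print(g, "->", to_iupac(g))
```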

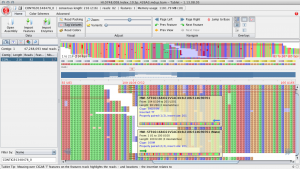

Also note that sometimes GATK will output a genotype call that looks like:

./.:.:3

While normally they should look like:

./. or 0/0:17,0:18:45:0,45,353

This is saying that there are three reads at this position, but the quality is so bad that GATK is not calling the genotype. If you have these, the scripts will throw an error but will correctly output an NN for that genotype. So if you get a normal output and many errors, it’s probably OK.